Do I Need A Robots.txt File? Understanding Its Role and Importance

Table of Contents

- Navigating the Role of a Robots.txt File in Search Engine Interactions

- Demystifying the Robots.txt File

- Is a Robots.txt File Essential for Your Website?

- Can Robots.txt Prevent Indexing?

- Locating Your Robots.txt File

- Practical Applications of a Robots.txt File

- Understanding Robots.txt File Directives

- 2. Disallow

- 3. Allow

- 4. Sitemap

- 5. Crawl Delay

- What are Regular Expressions and Wildcards?

- How to Create or Edit a Robots.txt File

- How to Test a Robots.txt File

- Common Mistakes in Robots.txt Files

- SEO Implications of Robots.txt Files

- What Happens If You Don’t Have a Robots.txt File?

- Conclusion

Navigating the Role of a Robots.txt File in Search Engine Interactions

The robots.txt file plays a pivotal role in guiding search engines through your website, indicating the areas they can access. However, it’s crucial to note that while most search engines recognize and respect the robots.txt file, their compliance with its directives can vary.

Demystifying the Robots.txt File

What exactly is a robots.txt file? It’s a plain-text file that speaks to the search engine bots, or spiders, that frequently visit your website. These bots, dispatched by search engines like Google, Yahoo, and Bing, are responsible for crawling and indexing your site’s content, making it visible in search results.

While bots generally benefit your website’s visibility, there are instances where unrestricted crawling might not be desirable. This is where the robots.txt file becomes essential. By incorporating specific instructions in this file, you can guide bots to crawl only the sections of your site that you deem necessary.

However, it’s important to recognize that not all bots will strictly adhere to your robots.txt directives. For example, Google ignores the crawl-delay directive.

Is a Robots.txt File Essential for Your Website?

It’s a common question: Do you really need a robots.txt file? The short answer is no; it’s not mandatory. Without a robots.txt file, bots will simply crawl and index your website as usual. The file becomes relevant when you wish to exert more control over the crawling process.

Having a robots.txt file offers several advantages:

- It helps manage server loads effectively.

- It prevents unnecessary crawling of irrelevant pages.

- It keeps well-behaved bots out of specific folders. Note that it is not a security mechanism, and each subdomain needs its own robots.txt file.

Can Robots.txt Prevent Indexing?

A common misconception is that a robots.txt file can stop content from being indexed. This isn’t entirely accurate. Different bots may interpret your instructions differently, potentially indexing content you intended to exclude. Moreover, if externally linked, such content might still be indexed.

To guarantee that a page remains unindexed, you should use a noindex meta tag, which looks like this:

<meta name="robots" content="noindex">

Remember, for a page to be excluded from indexing, bots must still be able to crawl it. If robots.txt disallows the page, crawlers never fetch it and never see the noindex tag.
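To check whether a page actually carries that tag, a small script can parse the HTML and look for it. This is a minimal sketch using Python's standard-library `html.parser`; the class name `RobotsMetaParser`, the function `has_noindex`, and the sample page are made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for token in attr_map.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page used only for this example.
page = '<html><head><meta name="robots" content="noindex"></head></html>'
print(has_noindex(page))  # True
```

The check only inspects the HTML it is given, so it says nothing about whether crawlers are allowed to fetch the page in the first place.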

Locating Your Robots.txt File

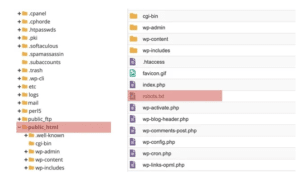

The robots.txt file is typically located at the root domain of your website. For instance, you can find our robots.txt file at https://www.mxdmarketing.com/robots.txt. Accessing and editing this file can be done via FTP or through your hosting provider’s File Manager in cPanel.
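Because the file always sits at the root of the host, its address can be derived from any page URL on the same site. The sketch below shows one way to do that with Python's `urllib.parse`; the function name `robots_txt_url` and the example.com address are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace the path
    # and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL used only for this example.
print(robots_txt_url("https://www.example.com/blog/post?id=1"))
# https://www.example.com/robots.txt
```

Each host has its own file, so a page on a subdomain resolves to that subdomain's robots.txt rather than the main site's.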

In CMS platforms like HubSpot, customization of the robots.txt file is straightforward from your account. WordPress users can find the file in the public_html folder, with a default installation including basic directives like:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

These lines instruct bots to avoid crawling the /wp-admin/ and /wp-includes/ directories. However, you might want to develop a more comprehensive file, which we’ll guide you through below.
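As a rough check of what those default lines do, the sketch below feeds them to Python's standard-library `urllib.robotparser`. The crawler name `ExampleBot` and the two paths are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# "ExampleBot" is a hypothetical crawler name; the wildcard group covers it.
print(parser.can_fetch("ExampleBot", "/wp-admin/options.php"))  # False
print(parser.can_fetch("ExampleBot", "/blog/hello-world/"))     # True
```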

Practical Applications of a Robots.txt File

There are various scenarios where customizing your robots.txt file is beneficial. These include managing crawl budgets and preventing certain website sections from being crawled and indexed. Let’s delve into some of these applications.

1. Block All Crawlers

Blocking all crawlers from accessing your site is not advisable for live websites but is ideal for development sites. By blocking crawlers, you prevent premature exposure of your pages on search engines.

2. Disallow Certain Pages from Crawling

A common and effective use of the robots.txt file is to restrict search engine bots from accessing certain parts of your site. This strategy optimizes your crawl budget and keeps unwanted pages out of search results. However, remember that disallowing a page from crawling doesn’t guarantee it won’t be indexed. For that, a noindex meta tag is necessary.

| Reason | Description |
|---|---|
| Control Crawler Access | Directs bots on what they can or cannot crawl. |
| Manage Crawl Budget | Prevents wasting server resources on irrelevant pages. |
| Enhance Privacy | Keeps certain directories or pages from being indexed. |
| Prevent Indexing of Specific Content | Blocks sensitive or under-construction pages from search results. |
| SEO Optimization | Helps in guiding search engines to prioritize important content. |

Understanding Robots.txt File Directives

The robots.txt file consists of directive blocks, each starting with a user-agent followed by specific rules. Search engines identify the relevant user-agent block and adhere to its directives.

There are various directives you can use, which we’ll explore now.

1. User-Agent

This directive names the bot that the following rules apply to, so rules aimed only at Bing or only at Google each start with their own user-agent line. Below are some common user-agents:

- User-agent: Googlebot

- User-agent: Googlebot-Image

- User-agent: Googlebot-Mobile

- User-agent: Googlebot-News

- User-agent: Bingbot

- User-agent: Baiduspider

- User-agent: msnbot

- User-agent: slurp (Yahoo)

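Note that major crawlers such as Google match user-agent names case-insensitively, whereas the paths in Allow and Disallow rules are case-sensitive.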

Wildcard User-Agent

The wildcard user-agent, denoted by an asterisk (*), applies a directive universally to all bots. If you want a rule to affect every bot, this is the user-agent to use:

User-agent: *

Each bot follows the group that matches it most specifically. A crawler with its own named group obeys that group and ignores the wildcard rules, so repeat any shared rules in each named group.
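The effect of a named group sitting next to the wildcard group can be seen with Python's standard-library `urllib.robotparser`. This is only a sketch: the `/private/` path and the crawler name `OtherBot` are assumptions for illustration, and real crawlers may differ in detail from this parser.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /private/

User-agent: Bingbot
Disallow: /portfolio
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Bingbot has its own group, so only that group's rules apply to it.
print(parser.can_fetch("Bingbot", "/portfolio/item"))  # False
print(parser.can_fetch("Bingbot", "/private/page"))    # True
# A bot without a named group falls back to the wildcard group.
print(parser.can_fetch("OtherBot", "/private/page"))   # False
```

Note that Bingbot may fetch `/private/page` here, because the wildcard rule is not inherited by the named group.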

2. Disallow

The disallow directive is a command used in robots.txt files to instruct search engines not to crawl or access specific pages or directories on a website.

Here are some ways you might use the disallow directive:

Block Access to a Specific Folder

This example demonstrates how to prevent all bots from crawling anything in the /portfolio directory:

User-agent: *

Disallow: /portfolio

To restrict only Bing from crawling that directory, the directive would be:

User-agent: Bingbot

Disallow: /portfolio

Block PDF or Other File Types

To prevent crawling of PDFs or other file types, use the following directive. The $ symbol signifies the end of the URL:

User-agent: *

Disallow: /*.pdf$

For PowerPoint files, the directive would be:

User-agent: *

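Disallow: /*.ppt$

Alternatively, consider placing these files in a specific folder and disallowing that folder. To keep such files out of the index rather than just uncrawled, serve them with a noindex X-Robots-Tag HTTP header, since PDFs and similar files cannot carry a meta tag. As with the meta tag, crawlers only see the header if they are allowed to fetch the file.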

Block Access to the Whole Website

This directive is useful for development sites or test folders, instructing all bots not to crawl the site. Remember to remove this directive when the site goes live:

User-agent: *

Disallow: /

3. Allow

The allow directive specifies pages or directories that should be accessible to bots, often serving as an override to the disallow option.

For example, to allow Googlebot to crawl a specific item in a disallowed portfolio directory:

User-agent: Googlebot

Disallow: /portfolio

Allow: /portfolio/crawlableportfolio

4. Sitemap

Including your sitemap’s location in the robots.txt file can aid search engine crawlers. However, if you’ve submitted your sitemaps directly to search engines’ webmaster tools, this step isn’t necessary:

Sitemap: https://yourwebsite.com/sitemap.xml

5. Crawl Delay

The crawl delay directive instructs bots to wait between requests when crawling your site, which can be beneficial for server performance. Be cautious with this directive, as it can limit how much of a large site gets crawled.

Note: Google and Baidu do not support crawl-delay. Adjustments for these crawlers must be made through their respective tools.
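To see how a crawl delay and a sitemap line are read back by a parser, the sketch below uses Python's standard-library `urllib.robotparser`. The delay value of 10 seconds and the choice of a Bingbot group are assumptions for illustration, not recommendations.

```python
from urllib.robotparser import RobotFileParser

# The 10-second delay is an illustrative value only.
rules = """\
User-agent: Bingbot
Crawl-delay: 10
Disallow: /portfolio

Sitemap: https://yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

print(parser.crawl_delay("Bingbot"))  # 10
print(parser.site_maps())             # ['https://yourwebsite.com/sitemap.xml']
```

`site_maps()` requires Python 3.8 or later. The Sitemap line is independent of any user-agent group, which is why it can sit on its own at the end of the file.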

What are Regular Expressions and Wildcards?

Robots.txt does not support full regular expressions, but major crawlers such as Google and Bing recognize two wildcard characters that give finer control over bot behavior. The asterisk (*) represents any sequence of characters, while the dollar sign ($) designates the end of a URL.
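One way to reason about these two characters is to translate a robots.txt pattern into an ordinary regular expression. The following is a minimal sketch of that idea, not any search engine's actual implementation; the function names `pattern_to_regex` and `matches` and the sample paths are made up for illustration.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then join the pieces with "match anything".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if path is covered by the robots.txt pattern."""
    # re.match anchors at the start, which mirrors prefix matching of rules.
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*.pdf$", "/files/report.pdf"))             # True
print(matches("/*.pdf$", "/files/report.pdf?download=1"))  # False
print(matches("/*?", "/shop?color=red"))                   # True
print(matches("/portfolio", "/portfolio/item-1"))          # True
```

The second call returns False because the `$` requires the URL to end in `.pdf`, while a pattern without `$` behaves as a plain prefix.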

For example, to prevent crawling of URLs with a question mark:

User-agent: *

Disallow: /*?

How to Create or Edit a Robots.txt File

To create a robots.txt file, use a text editor like Notepad or TextEdit, add your directives, and save the file as plain text named “robots.txt”. Test the file, then upload it to the root directory of your site via FTP or cPanel. WordPress users can use plugins like Yoast or All In One SEO for this purpose.

Robots.txt generator tools are also available to help minimize errors.
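If you prefer to script the file rather than type it by hand, the text can be assembled from a simple data structure. This is a sketch only: the function name `build_robots_txt` and its input format are assumptions for illustration, and the rules shown reuse the WordPress defaults and sitemap address from earlier in this article.

```python
def build_robots_txt(groups: dict, sitemap: str = "") -> str:
    """Render user-agent groups and an optional sitemap URL as robots.txt text.

    groups maps a user-agent name to a list of (directive, path) pairs.
    """
    lines = []
    for user_agent, rules in groups.items():
        lines.append(f"User-agent: {user_agent}")
        for directive, path in rules:
            lines.append(f"{directive}: {path}")
        lines.append("")  # a blank line separates groups
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines).rstrip("\n") + "\n"


content = build_robots_txt(
    {"*": [("Disallow", "/wp-admin/"), ("Disallow", "/wp-includes/")]},
    sitemap="https://yourwebsite.com/sitemap.xml",
)
print(content)
```

The resulting string can then be saved as `robots.txt` (for example with `pathlib.Path("robots.txt").write_text(content, encoding="utf-8")`) and uploaded to the site root.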

How to Test a Robots.txt File

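Before implementing your robots.txt file, test it. Google’s standalone robots.txt tester lived in the old version of Google Search Console and has since been retired; the current Search Console provides a robots.txt report instead. Testing helps confirm that your directives are valid and won’t cause crawling or indexing issues.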
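A quick local check is also possible before uploading. The sketch below compares a draft file against a list of expectations using Python's standard-library `urllib.robotparser`; the function name `find_mismatches` and the sample paths are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt: str, expectations: list) -> list:
    """Return the (user_agent, path, expected) cases the file does not satisfy."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, path, expected)
        for agent, path, expected in expectations
        if parser.can_fetch(agent, path) != expected
    ]


robots_txt = """\
User-agent: *
Disallow: /portfolio
"""

# Each entry: (crawler name, path, whether crawling should be allowed).
expectations = [
    ("Googlebot", "/portfolio/item-1", False),  # should be blocked
    ("Googlebot", "/blog/", True),              # should stay crawlable
    ("Bingbot", "/", True),
]

print(find_mismatches(robots_txt, expectations))  # []
```

An empty list means every expectation held. This parser uses simple prefix matching, so rules that rely on `*` or `$` may be evaluated differently than they would be by Google or Bing.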

Common Mistakes in Robots.txt Files

While robots.txt files are powerful tools for website management, certain common errors can lead to significant issues. Understanding these mistakes is crucial for maintaining the health and visibility of your website.

Incorrect Syntax

One of the most frequent errors is incorrect syntax. The robots.txt file follows a specific format, and even small deviations can render it ineffective. Common syntax errors include misspelling directives like ‘Disallow’ or incorrect use of wildcard characters.
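Misspelled directives are easy to catch with a small script. The following is a minimal sketch of such a check; the function name `lint_robots_txt` is hypothetical, and the list of known directives is limited to the ones covered in this article, so other valid directives would be flagged too.

```python
# Directives discussed in this article; an assumption, not an exhaustive list.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list:
    """Return (line_number, message) pairs for lines that look malformed."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        directive = line.split(":", 1)[0].strip().lower()
        if directive not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{directive}'"))
    return problems


sample = """\
User-agent: *
Dissallow: /portfolio
Disallow /private
"""
print(lint_robots_txt(sample))
# [(2, "unknown directive 'dissallow'"), (3, "missing ':' separator")]
```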

Overly Broad Disallow Directives

Using ‘Disallow: /’ without careful consideration can block the entire website from being crawled, leading to a drop in search engine visibility. It’s essential to be precise about which directories or pages you want to exclude.

Blocking Essential Resources

Another mistake is inadvertently blocking resources that are crucial for rendering the website correctly, such as CSS or JavaScript files. This can prevent search engines from properly understanding and indexing your site’s content.

Conflicting Directives

Conflicts between ‘Allow’ and ‘Disallow’ directives can cause confusion for crawlers. It’s important to structure these directives clearly to avoid ambiguity.
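Crawlers that follow the Robots Exclusion Protocol standard (RFC 9309), Google among them, resolve such conflicts by applying the most specific rule, meaning the one with the longest matching path, and prefer Allow when two rules are equally specific. The sketch below illustrates that precedence for plain path prefixes only; the function name `is_allowed` is hypothetical and wildcards are left out to keep it short.

```python
def is_allowed(rules: list, path: str) -> bool:
    """Apply longest-match precedence to (directive, prefix) rules."""
    best_length = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # A longer prefix is more specific; on a tie, Allow wins.
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


rules = [
    ("Disallow", "/portfolio"),
    ("Allow", "/portfolio/crawlableportfolio"),
]
print(is_allowed(rules, "/portfolio/crawlableportfolio"))  # True
print(is_allowed(rules, "/portfolio/other-item"))          # False
print(is_allowed(rules, "/blog/"))                         # True
```

Not every parser works this way. Some, including Python's own `urllib.robotparser`, apply the first matching rule in file order, so listing the more specific Allow line before the broader Disallow line keeps the outcome the same under both approaches.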

Forgetting to Update

A robots.txt file is not a ‘set and forget’ tool. Failing to update it to reflect changes in your website’s structure can lead to unintended crawling issues.

SEO Implications of Robots.txt Files

The robots.txt file, while primarily a tool for managing crawler access, also has significant implications for SEO.

Impact on Crawl Budget

Robots.txt files can influence how search engines allocate crawl budget to your site. By disallowing unimportant pages, you can ensure that search engines spend more time crawling and indexing valuable content.

Influence on Indexing

Incorrect use of robots.txt can lead to important pages not being indexed. Ensuring that your file accurately reflects the pages you want to be crawled and indexed is crucial for maintaining your site’s visibility in search results.

Effect on Page Rendering and Content Understanding

Blocking resources like CSS and JavaScript can hinder a search engine’s ability to render and understand your site, potentially impacting how your content is indexed and ranked.

Indirect Impact on User Experience

While robots.txt files don’t directly affect user experience, they can indirectly influence it by controlling which pages are indexed and how your site is represented in search results. A well-structured robots.txt file ensures that users find relevant and valuable content when they search for your site.

Understanding and avoiding common mistakes in robots.txt files, and recognizing their SEO implications, are essential steps in optimizing your website’s interaction with search engines and improving its overall online presence.

What Happens If You Don’t Have a Robots.txt File?

Not having a robots.txt file on your website is a common scenario, especially for new or smaller websites. Understanding the implications of its absence is crucial for website management and SEO strategy.

Unrestricted Crawling

In the absence of a robots.txt file, search engine bots will crawl your website without any restrictions. This means every accessible page and resource on your site can be crawled and indexed, which might not always align with your SEO and privacy goals.

Potential Overload on Server Resources

Without a robots.txt file to guide them, bots might crawl your site more aggressively, potentially leading to increased server load. This can be particularly challenging for websites with limited server resources or those hosting large amounts of content.

Lack of Control Over Indexed Content

Without directives to guide search engines, you have less control over what gets indexed. This could result in unwanted or low-value pages appearing in search results, diluting the quality of your site’s search presence.

Missed Opportunities for Optimization

A robots.txt file is not just about blocking content; it’s also a tool for optimizing how search engines interact with your site. By not having one, you miss out on the opportunity to guide search engines towards your most important content, potentially affecting your site’s SEO performance.

While not mandatory, a robots.txt file is a fundamental component of website management, offering control over how search engines crawl and index your site. Its absence can lead to less efficient crawling and a lack of strategic control over your online content’s visibility.

Conclusion

Understanding and configuring your robots.txt file is a key aspect of SEO. Proper use of this file helps search engines spend their crawl on your most valuable pages, which supports your site’s overall SEO performance.