How to Add Sitemaps to Robots.txt

Table of Contents

- Robots.txt

- XML Sitemaps

- How Are robots.txt & Sitemaps Interconnected?

- Adding Your XML Sitemap to Your Robots.txt File

- Step #1: Locate Your Sitemap URL

- Step #2: Locate Your Robots.txt File

- Step #3: Add Sitemap Location To Robots.txt File

- What If You Have Multiple Sitemaps?

- The Importance of Sitemaps and Robots.txt in SEO

- Best Practices for Updating Sitemaps in Robots.txt

Sitemaps tell Google which pages on your website matter most for indexing. Among the various ways to submit a sitemap, referencing it in your robots.txt file is a highly effective way to make sure Google finds it.

As part of a digital marketing team or as a web developer, achieving visibility in search engine results is a primary goal. This visibility requires your site and its individual pages to be crawled and indexed by search engine bots.

Your website’s technical framework includes two essential files to guide these bots: Robots.txt and an XML sitemap.

Key Takeaways:

- Ensure your website has an XML sitemap and locate its URL, typically at /sitemap.xml.

- Find or create a robots.txt file in your site’s root directory to manage search engine crawling.

- Add a directive in the robots.txt file pointing to your sitemap URL to facilitate efficient indexing.

- Regularly update your sitemap and robots.txt, especially after significant changes to your site.

- Understand the role of sitemaps and robots.txt in SEO for improved search engine visibility.

Robots.txt

This is a basic text file located in your website’s root directory. It provides directives to search engine bots about which pages they can or cannot crawl on your site.

Robots.txt can also restrict certain bots from accessing your site. For instance, during a site’s development phase, it’s common to prevent bots from accessing the site until it’s fully prepared for public viewing.
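As a rough illustration of how such directives are interpreted, the sketch below uses Python's standard `urllib.robotparser` module to evaluate two made-up rule sets. The rule contents, the `can_crawl` helper, and the `yourdomain.com` URLs are assumptions for this example, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a site still in development: block every bot.
STAGING_RULES = """\
User-agent: *
Disallow: /
"""

# Hypothetical robots.txt for a live site: allow everything except /admin/.
LIVE_RULES = """\
User-agent: *
Disallow: /admin/
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(can_crawl(STAGING_RULES, "Googlebot", "https://yourdomain.com/"))
    print(can_crawl(LIVE_RULES, "Googlebot", "https://yourdomain.com/blog/"))
```

Real crawlers may interpret edge cases differently from this parser, so treat it as a quick sanity check rather than a definitive test.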

Robots.txt is typically the first stop for crawlers when they visit your site. Even if your intention is to allow all bots access to the entire site, it’s still advisable to have a robots.txt file that explicitly permits this.

Moreover, robots.txt files should mention the location of another crucial file: your XML Sitemap. This sitemap contains details of every page you wish search engines to find.

This guide will demonstrate how to correctly reference your XML sitemap in your robots.txt file. But first, let’s understand what a sitemap is and its significance.

XML Sitemaps

An XML sitemap is a file listing all the pages on your site that you want search engine robots to find and index.

For instance, you may want search engines to index all your blog posts to enhance their visibility in search results. However, you might exclude tag pages from indexing as they might not serve as effective landing pages.

XML sitemaps can also carry metadata about each URL, such as when the page was last modified. Just like robots.txt, an XML sitemap is vital: it helps search engine bots locate all your pages and signals which pages you consider worth indexing.
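To make the structure concrete, here is a minimal sketch that builds a small sitemap with Python's standard library. The `build_sitemap` helper, the page URLs, and the dates are placeholders invented for this example; the `urlset`, `url`, `loc`, and `lastmod` element names come from the Sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # optional metadata
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder pages: include blog posts, leave tag pages out.
    print(build_sitemap([
        ("https://yourdomain.com/blog/first-post", "2024-01-15"),
        ("https://yourdomain.com/blog/second-post", "2024-02-01"),
    ]))
```

Most CMS plugins generate this file for you; the sketch is only meant to show what ends up inside it.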

Steps to Add Sitemap to Robots.txt

| Step | Action | Description |
|---|---|---|
| 1 | Locate Your Sitemap URL | Check if your website has an XML sitemap, typically found at /sitemap.xml. |
| 2 | Locate Your Robots.txt File | Find the robots.txt file in your site’s root directory or create one if it doesn’t exist. |
| 3 | Add Sitemap Location to Robots.txt | Edit the robots.txt file to include a directive pointing to your sitemap URL. |

How Are robots.txt & Sitemaps Interconnected?

In 2006, a collaborative effort by Yahoo, Microsoft, and Google led to the adoption of a standardized method of submitting website pages via XML sitemaps. Initially, you needed to submit your XML sitemaps through webmaster tools such as Google Search Console and Bing Webmaster Tools. Other search engines, like DuckDuckGo, derived their results from Bing/Yahoo.

By April 2007, these platforms also supported identifying XML sitemaps via robots.txt, a feature known as Sitemaps Autodiscovery.

This development meant that even without direct sitemap submissions to search engines, your sitemap could be located via your site’s robots.txt file.

(Note: While direct sitemap submission is still an option, remember that Google & Bing are not the only search engines!)

Thus, the robots.txt file gained increased importance for webmasters, as it facilitates the discovery of all site pages by search engine bots.

Adding Your XML Sitemap to Your Robots.txt File

Follow these three easy steps to include your XML sitemap’s location in your robots.txt file:

Step #1: Locate Your Sitemap URL

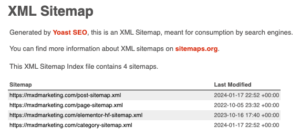

To begin, confirm whether your website, possibly developed by a third party, includes an XML sitemap. Typically, your sitemap is located at /sitemap.xml. To check, replace ‘yourdomain.com’ with your own domain in a URL like this: https://yourdomain.com/sitemap.xml.

If your site has multiple XML sitemaps, a sitemap index (for example, /sitemap_index.xml) might be used. Additionally, you can try finding your sitemap via Google using search operators like “site:yourdomain.com filetype:xml”. This method, however, requires that your site is already indexed by Google.

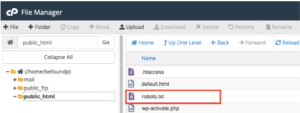

Alternatively, you can locate your XML sitemap file in your website’s File Manager. If a sitemap doesn’t exist, you can create one using tools like XML Sitemap generator or follow guidelines at Sitemaps.org.

Step #2: Locate Your Robots.txt File

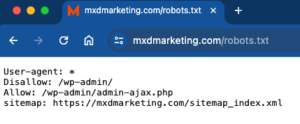

To check for a robots.txt file, simply append /robots.txt to your domain (e.g., https://yourdomain.com/robots.txt). If it’s missing, you’ll need to create one and place it in your web server’s root directory. Remember to name the file in all lower case as ‘robots.txt’.

If you’re unfamiliar with these processes, consider enlisting a web developer’s help.

Step #3: Add Sitemap Location To Robots.txt File

With access to your web server, open the robots.txt file at your site’s root. You can add a directive for your sitemap URL in the robots.txt, like this:

Sitemap: http://yourdomain.com/sitemap.xml

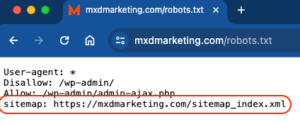

This directive can be placed anywhere in the robots.txt file and is independent of user-agent directives. To see an example, visit any website and append /robots.txt to its domain.
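If you prefer to script this step, the sketch below appends the directive to the text of a robots.txt file only when it is not already declared. The function names and the `yourdomain.com` URL are placeholders for this example; `RobotFileParser.site_maps()` is part of Python's standard library (3.8+).

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return every sitemap URL declared in the robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None if none found


def add_sitemap_directive(robots_txt: str, sitemap_url: str) -> str:
    """Append a Sitemap line unless that URL is already declared."""
    if sitemap_url in declared_sitemaps(robots_txt):
        return robots_txt
    body = robots_txt.rstrip("\n")
    separator = "\n\n" if body else ""
    return f"{body}{separator}Sitemap: {sitemap_url}\n"


if __name__ == "__main__":
    original = "User-agent: *\nDisallow: /admin/\n"
    print(add_sitemap_directive(original, "https://yourdomain.com/sitemap.xml"))
```

The sketch works on the file's text only; reading the file from your server and writing it back is left to whatever access method you already use.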

What If You Have Multiple Sitemaps?

If your website is large enough to need multiple sitemaps (each limited to 50,000 URLs and 50 MB uncompressed), list them all in a sitemap index file. This index acts as a sitemap of sitemaps.

In your robots.txt, you can either specify the URL of your sitemap index file or list URLs for each individual sitemap file:

Sitemap: http://yourdomain.com/sitemap_index.xml

Or,

Sitemap: http://yourdomain.com/sitemap_pages.xml

Sitemap: http://yourdomain.com/sitemap_posts.xml
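For large sites, splitting URLs across files and building the index can be automated. The following is a minimal sketch under the 50,000-URL limit mentioned above; the helper names and example URLs are hypothetical, while `sitemapindex`, `sitemap`, and `loc` are the element names defined by the Sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit


def split_into_sitemaps(urls: list[str],
                        limit: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split a long URL list into chunks that each fit in one sitemap."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Build a sitemap index that points at each individual sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = loc
    return ET.tostring(index, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap_index([
        "https://yourdomain.com/sitemap_pages.xml",
        "https://yourdomain.com/sitemap_posts.xml",
    ]))
```

This sketch checks only the URL count; the file-size limit would need a separate check on the generated files.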

With this information, you should now be equipped to effectively integrate a sitemap into your robots.txt file, enhancing your website’s SEO visibility.

Have you added your sitemap to your robots.txt file yet?

The Importance of Sitemaps and Robots.txt in SEO

Sitemaps and robots.txt files are instrumental in guiding search engine crawlers through your website. A sitemap acts as a roadmap of your site, indicating which pages are crucial and should be indexed. This comprehensive list aids search engines in discovering and understanding the structure of your website, ensuring that important pages don’t get overlooked.

On the other hand, the robots.txt file functions as a gatekeeper, instructing search engines on which parts of your site can be crawled. It’s essential for managing crawler traffic, preventing overloading of your site’s server, and keeping crawlers out of sections you don’t want them to fetch. Keep in mind that it controls crawling rather than access: a blocked URL can still appear in search results if other sites link to it, so robots.txt should not be relied on to keep pages private. Effectively using both sitemaps and robots.txt can significantly improve a website’s SEO performance by optimizing the crawling process and ensuring that the most important pages are easily found and indexed by search engines.

Best Practices for Updating Sitemaps in Robots.txt

Regularly updating your sitemap is crucial for maintaining an SEO-friendly site. Ensure that your sitemap is current whenever new pages are added, or old ones are removed. This practice helps search engines quickly discover and index new content. The frequency of updates largely depends on how often your site’s content changes. For dynamic sites, consider automating the sitemap update process.

When updating sitemaps in robots.txt, it’s important to verify that the sitemap URL is correct and accessible. If you’re using a sitemap index file due to multiple sitemaps, make sure all linked sitemaps are updated and listed correctly in the index. Remember, an outdated or inaccurate sitemap can hinder the efficiency of search engine crawlers, potentially impacting your site’s visibility and rankings.
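Part of this verification can be scripted. The sketch below flags declared sitemap URLs that are not full http(s) URLs, which is a common mistake when editing by hand. The `invalid_sitemap_urls` helper and the sample robots.txt content are assumptions for this example, and the check covers only the form of the URL, not whether it is actually reachable.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def invalid_sitemap_urls(robots_txt: str) -> list[str]:
    """Return declared sitemap URLs that are not absolute http(s) URLs."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in parser.site_maps() or []:
        parts = urlsplit(url)
        # A usable sitemap reference needs both a scheme and a host.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(url)
    return problems


if __name__ == "__main__":
    sample = (
        "User-agent: *\n"
        "Disallow: /admin/\n"
        "Sitemap: /sitemap.xml\n"
        "Sitemap: https://yourdomain.com/sitemap_index.xml\n"
    )
    print(invalid_sitemap_urls(sample))
```

To confirm accessibility as well, you would still need to request each URL and check that it returns the sitemap.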